
Background: why ‘safe broadcast’ is required

On Chinese platforms like Douyin, Kuaishou and Taobao Live, streams are reviewed by both AI and human moderators. A single slip can get a room suspended instantly. To mitigate risk, most teams keep a delay of at least 20 seconds so operators have time to DUMP/MUTE. Top esports broadcasts sometimes go as high as 2 minutes. Viewers may complain about latency, but for businesses, giving the last‑mile moderator enough time to react is far more important than shaving a few seconds off the delay.

A costly failure: the 20-second test

I didn’t have a dedicated delay unit, but still wanted a 20‑second delay in OBS. How hard could it be?

First attempt: assuming a filter could do it all

The plan was to use OBS’s built‑in Video Delay (Async) filter:

Filter → Video Delay (Async)

Delay → 20000 ms

Important: the Video Delay (Async) filter delays video frames only. Audio is still sent with real‑time timestamps.

So how should we delay the audio?

How I tried to delay the audio

Here’s what I tried:

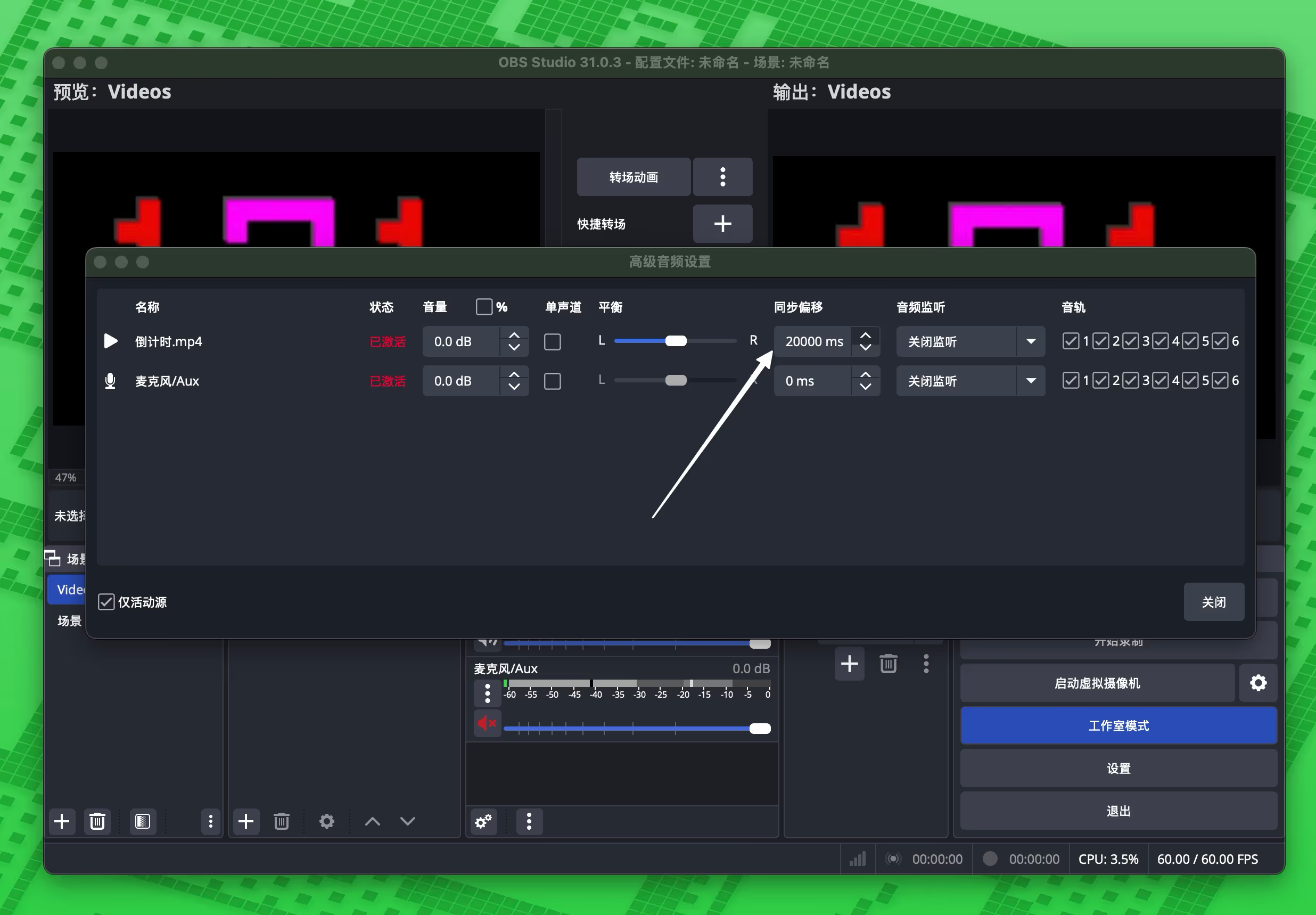

- In Mixer → Advanced Audio Properties, set the Sync Offset of all outbound audio sources to 20000 ms to match the video delay.
- In Audio Monitoring, enable Monitor and Output so local monitoring stays real‑time while the outbound stream is 20 seconds late (matching video).

However, once pushed upstream, the live room soon turned into a disaster: intermittent crackling and unusable audio on the platform side.

Root cause: where the distortion comes from

This puzzled me, so I combed through GitHub issues and the OBS forum. Here’s what I found:

1) Video Delay (Async) is not designed for ‘safe broadcast’ [1]: its real purpose is correcting lip‑sync differences on the order of 100–200 ms. It was never meant for a broadcast delay of tens of seconds, even though the UI lets you type a large number.

2) OBS audio buffering has a hard cap of ~960 ms [2]: in OBS’s code, the maximum audio buffering (MAX_BUFFERING_TICKS) is effectively limited to about 960 ms. No matter what you type in the filter, audio won’t be delayed beyond ~1 second.

3) Mixers/filters can’t ‘fill’ a 20‑second gap. After noticing the video was behind, I tried forcing 20000 ms in Advanced Audio Properties → Sync Offset and delaying monitoring on the mic channel. It still failed, for two reasons:

- The 960 ms ceiling still applies — Sync Offset relies on the same buffer and gets clipped.

- Mixers don’t rewrite timestamps — even if monitoring sounds “in sync”, RTMP packets keep original timestamps. The server still sees “audio early, video late” and starts resampling/dropping.

In short, what you hear locally is not what the audience hears. Mixer sliders and ‘sync offset’ filters are for sub‑second lip‑sync tweaks — not 20‑second broadcast delays.
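The ~960 ms figure can be reproduced with simple arithmetic. The sketch below assumes libobs buffers at most MAX_BUFFERING_TICKS = 45 audio ticks of AUDIO_OUTPUT_FRAMES = 1024 samples each. Both values are my reading of the OBS source and may differ between versions. The clipping function is a simplified model of the behavior described above, not OBS code.

```python
# Assumed libobs constants (check your OBS version's source):
MAX_BUFFERING_TICKS = 45     # maximum number of audio ticks libobs will buffer
AUDIO_OUTPUT_FRAMES = 1024   # audio samples per tick


def max_audio_buffer_ms(sample_rate_hz: int) -> float:
    """Upper bound on audio buffering, in milliseconds, at a given sample rate."""
    return MAX_BUFFERING_TICKS * AUDIO_OUTPUT_FRAMES * 1000 / sample_rate_hz


def effective_audio_delay_ms(requested_ms: float, sample_rate_hz: int = 48_000) -> float:
    """Simplified model: a requested audio offset is clipped at the buffer cap."""
    return min(requested_ms, max_audio_buffer_ms(sample_rate_hz))


if __name__ == "__main__":
    print(max_audio_buffer_ms(48_000))       # 960.0
    print(effective_audio_delay_ms(20_000))  # 960.0: the 20-second offset is lost
    print(effective_audio_delay_ms(150))     # 150: lip-sync sized offsets fit
```

The cap scales with sample rate. At 44.1 kHz, the same tick count gives roughly 1045 ms. Either way, it is nowhere near 20 seconds.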

With 20000 ms set:

- Video really was 20 seconds late.

- Audio ignored the offset due to the 960 ms cap and remained close to real time.

That ~20‑second timestamp gap forced the RTMP server (YouTube/Bilibili) to “fix” sync by aggressive resampling/dropping on the audio track — the source of the crackling.

I validated this repeatedly on YouTube: once Video Delay exceeds ~10 seconds, crackling/distortion almost always appears for viewers.

Lesson learned: fix the layer, not the knob

My mistake was treating render‑layer knobs as if they were transport‑layer buffers. Proper broadcast delay must happen at the stream/packaging layer (RTMP/SRT), where audio and video packets are delayed together.
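A toy model makes the difference between the two layers concrete. Everything here is hypothetical: the Packet type and the functions are illustrations, not OBS APIs. The 960 ms audio ceiling is the figure from the root‑cause section. Each packet records when content captured at a given instant reaches the outgoing stream.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    track: str       # "audio" or "video"
    capture_ms: int  # when the content was captured
    out_ms: int      # when that content reaches the outgoing stream


def render_layer_delay(pkts, video_delay_ms, audio_offset_ms, audio_cap_ms=960):
    """Per-track knobs: video gets the full delay, audio is clipped at the cap."""
    delayed = []
    for p in pkts:
        if p.track == "video":
            shift = video_delay_ms
        else:
            shift = min(audio_offset_ms, audio_cap_ms)
        delayed.append(replace(p, out_ms=p.out_ms + shift))
    return delayed


def transport_layer_delay(pkts, delay_ms):
    """Stream-level delay: every packet, audio or video, is held for the same time."""
    return [replace(p, out_ms=p.out_ms + delay_ms) for p in pkts]


def av_skew_ms(pkts):
    """How much later the video leaves than audio captured at the same instant."""
    video = next(p.out_ms for p in pkts if p.track == "video")
    audio = next(p.out_ms for p in pkts if p.track == "audio")
    return video - audio


if __name__ == "__main__":
    live = [Packet("audio", 0, 0), Packet("video", 0, 0)]
    print(av_skew_ms(render_layer_delay(live, 20_000, 20_000)))  # 19040
    print(av_skew_ms(transport_layer_delay(live, 20_000)))       # 0
```

With per‑track knobs, the skew equals whatever the audio path fails to absorb. With a stream‑level delay, both tracks shift together, so the skew stays at zero no matter how long the delay is.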

Engineering takeaways

- Layering matters: a small UI slider can hide a very different abstraction underneath. Delay belongs to the transport layer; forcing it into the render layer yields a fragile illusion.

- End‑to‑end view: local monitoring that “feels fine” does not mean the stream is in sync at CDN ingest. Always inspect the final output stream.

A pragmatic dual‑OBS ‘safe broadcast’ architecture

If you don’t have a hardware delay box, architecture can still save you:

- Technique: keep the delay upstream and monitor locally; your broadcast box consumes an already‑delayed stream.

- Operations: bind DUMP/MUTE to a Stream Deck (or similar) to give operators a 5–10‑second reaction window.
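Conceptually, DUMP and MUTE are operations on the upstream delay buffer. The class below is a hypothetical sketch, not how OBS, vMix, or any hardware unit implements them. DUMP discards everything still queued so it never airs, and MUTE replaces outgoing audio payloads while it is enabled. Zero bytes stand in for silence here; a real pipeline would need properly encoded silent audio frames.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Packet:
    track: str        # "audio" or "video"
    arrival_s: float  # when the packet entered the delay line
    payload: bytes    # encoded data (opaque here)


class DelayLine:
    """Hypothetical transport-layer delay holding encoded A/V packets together."""

    def __init__(self, delay_s: float) -> None:
        self.delay_s = delay_s
        self.queue: deque[Packet] = deque()
        self.muted = False

    def push(self, pkt: Packet) -> None:
        self.queue.append(pkt)

    def pop_ready(self, now_s: float) -> list[Packet]:
        """Release packets that have waited at least delay_s, in arrival order."""
        ready = []
        while self.queue and now_s - self.queue[0].arrival_s >= self.delay_s:
            pkt = self.queue.popleft()
            if self.muted and pkt.track == "audio":
                # Placeholder silence: zero bytes of the same length.
                pkt = Packet(pkt.track, pkt.arrival_s, bytes(len(pkt.payload)))
            ready.append(pkt)
        return ready

    def dump(self) -> int:
        """DUMP: drop everything still buffered; returns how many packets were dropped."""
        dropped = len(self.queue)
        self.queue.clear()
        return dropped

    def set_mute(self, muted: bool) -> None:
        """MUTE: silence outgoing audio while enabled."""
        self.muted = muted


if __name__ == "__main__":
    line = DelayLine(delay_s=20.0)
    line.push(Packet("video", 0.0, b"v0"))
    line.push(Packet("audio", 0.0, b"a0"))
    print(len(line.pop_ready(now_s=19.9)))  # 0: still inside the delay window
    print(len(line.pop_ready(now_s=20.0)))  # 2: both tracks released together
    line.push(Packet("video", 25.0, b"incident"))
    print(line.dump())                      # 1: never reaches the platform
```

The Stream Deck buttons would trigger the equivalent of dump() and set_mute() on the upstream box. The operator's reaction window is however much of the delay is still left when the problem is spotted.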

Concrete setup

The core idea: the upstream delay box handles buffering and emergency actions; the broadcast box just listens and forwards.

- Delay / upstream box
  - Use OBS’s Stream Delay (Settings → Advanced), vMix, or a dedicated delay unit on this box.
  - Buffer ~20 seconds, and map hotkeys for DUMP (drop frames) / MUTE (silence) in emergencies.

- Main broadcast box (your primary OBS)
  - Listen to the delayed stream via RTMP or SRT.
  - Do not use any delay filters here.
  - Push to platforms as usual.

This way audio and video arrive already in sync, and the broadcast box does not need to buffer 20 seconds of frames — eliminating the root cause of crackling.
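To put “buffer 20 seconds of frames” in perspective, compare raw frames with encoded packets. This back‑of‑the‑envelope sketch assumes 1080p60 frames in NV12 (about 1.5 bytes per pixel). It also assumes a hypothetical stream of 6000 kbps video plus 160 kbps audio. Your formats and bitrates will differ.

```python
def raw_buffer_bytes(width: int, height: int, fps: int, seconds: int,
                     bytes_per_pixel: float = 1.5) -> int:
    """Uncompressed frames held for `seconds` (NV12 is about 1.5 bytes per pixel)."""
    frame_bytes = int(width * height * bytes_per_pixel)
    return frame_bytes * fps * seconds


def encoded_buffer_bytes(video_kbps: int, audio_kbps: int, seconds: int) -> int:
    """Encoded stream held for `seconds` at the given bitrates (1 kbps = 1000 bit/s)."""
    return (video_kbps + audio_kbps) * 1000 * seconds // 8


if __name__ == "__main__":
    raw = raw_buffer_bytes(1920, 1080, 60, 20)
    enc = encoded_buffer_bytes(6000, 160, 20)
    print(raw / 1e9)  # 3.73248 (GB)
    print(enc / 1e6)  # 15.4 (MB)
```

That is roughly 3.7 GB of uncompressed frames against about 15 MB of encoded stream. Holding encoded packets upstream is far cheaper than holding raw frames in the render pipeline, assuming those frames are kept uncompressed.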

Minimal OBS‑only setup: no plugins, make OBS the server

Option 1: RTMP (Media Source + listen=1) [3]

This is the most compatible approach.

RTMP receiver (broadcast OBS)

- Add a new “Media Source” to your scene.
- Uncheck “Local File”.
- Enter the following in the “Input” field: rtmp://0.0.0.0:1935/live/app
- In “FFmpeg Options”, enter the key parameter: listen=1

After this step, your broadcast machine is listening on port 1935, waiting for the delay machine to connect.

RTMP sender (delay OBS)

- Open Settings → Stream.
- Select “Custom” for Service.
- Enter the server address rtmp://<broadcast-machine-IP>:1935/live/app.
- The stream key can be left blank or filled in arbitrarily.

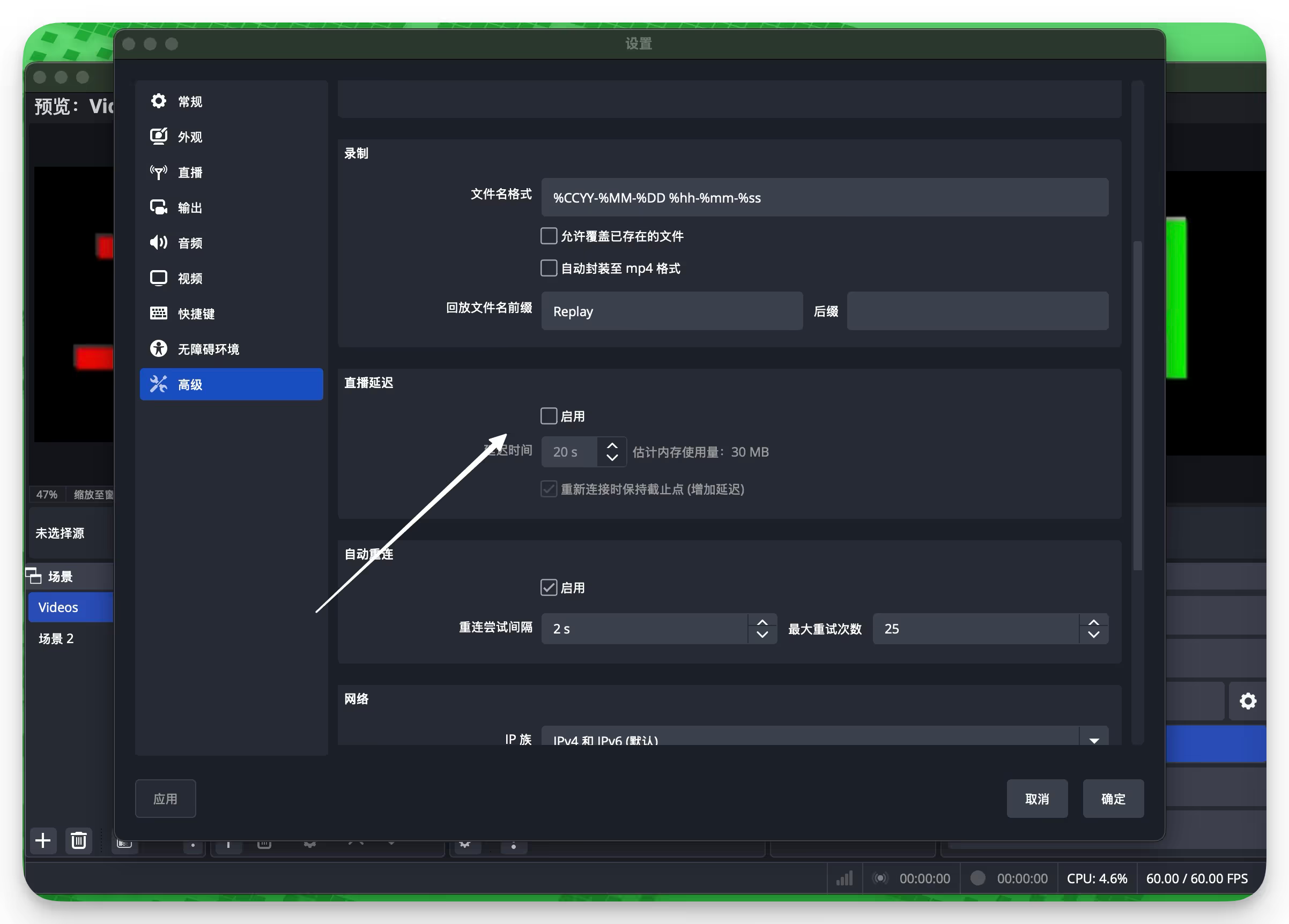

Then, in OBS Settings → Advanced → Stream Delay, enable the delay and set it to 20 seconds [4].

Click “Start Streaming”, and the delayed feed will appear steadily in your broadcast machine’s media source.
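If you rebuild this setup often, it can help to keep the values for both boxes in one place. rtmp_settings below is a hypothetical helper that only assembles the strings from the steps above. It defaults to port 1935 and the live/app path used here, and it does not talk to OBS. It assumes an IPv4 address for the broadcast machine, and 192.168.1.20 is a placeholder.

```python
import ipaddress


def rtmp_settings(broadcast_ip: str, port: int = 1935, path: str = "live/app",
                  delay_s: int = 20) -> dict:
    """Gather the receiver and sender values from the steps above (hypothetical helper)."""
    ipaddress.IPv4Address(broadcast_ip)  # raises ValueError for a malformed address
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return {
        # Broadcast OBS: Media Source with "Local File" unchecked
        "receiver": {
            "input": f"rtmp://0.0.0.0:{port}/{path}",
            "ffmpeg_options": "listen=1",
        },
        # Delay OBS: Settings > Stream > Custom, plus Settings > Advanced > Stream Delay
        "sender": {
            "server": f"rtmp://{broadcast_ip}:{port}/{path}",
            "stream_key": "",
            "stream_delay_s": delay_s,
        },
    }


if __name__ == "__main__":
    for box, values in rtmp_settings("192.168.1.20").items():
        print(box, values)
```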

Option 2: SRT (built into OBS 25+, lower latency)

Since OBS 25.0, SRT is built‑in. Setup is simple, and its UDP nature usually performs better than TCP‑based RTMP on shaky networks.

SRT receiver (broadcast OBS) [5]

- Media Source → uncheck Local File
- Input = srt://0.0.0.0:6000?mode=listener

SRT sender (delay OBS)

- Settings → Stream → Custom
- Server = srt://<broadcast-box IP>:6000?mode=caller
- Enable Stream Delay in Settings → Advanced and set it to 20 s.

Click Start Streaming; the picture arrives with lower end‑to‑end latency than RTMP.
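A common mistake is giving both ends the same mode or mismatched ports. This hypothetical checker parses the two URLs with Python’s standard urllib.parse and flags those problems. It only inspects strings, so it cannot detect things like a firewall blocking UDP port 6000. A missing mode parameter is reported rather than guessed.

```python
from __future__ import annotations

from urllib.parse import parse_qs, urlsplit


def srt_endpoint(url: str) -> tuple[str | None, int | None, str | None]:
    """Return (host, port, mode) from an srt:// URL; mode is None if absent."""
    parts = urlsplit(url)
    if parts.scheme != "srt":
        raise ValueError(f"not an SRT URL: {url}")
    mode = parse_qs(parts.query).get("mode", [None])[0]
    return parts.hostname, parts.port, mode


def check_srt_pair(receiver_url: str, sender_url: str) -> list[str]:
    """List problems with a receiver/sender pair; an empty list means it looks consistent."""
    problems = []
    _, r_port, r_mode = srt_endpoint(receiver_url)
    s_host, s_port, s_mode = srt_endpoint(sender_url)
    if r_mode != "listener":
        problems.append(f"receiver mode is {r_mode!r}, expected 'listener'")
    if s_mode != "caller":
        problems.append(f"sender mode is {s_mode!r}, expected 'caller'")
    if r_port != s_port:
        problems.append(f"port mismatch: receiver {r_port}, sender {s_port}")
    if s_host in (None, "0.0.0.0"):
        problems.append("sender must target the broadcast box's IP, not 0.0.0.0")
    return problems


if __name__ == "__main__":
    print(check_srt_pair("srt://0.0.0.0:6000?mode=listener",
                         "srt://192.168.1.20:6000?mode=caller"))  # []
```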

[Diagram: dual‑OBS setup over RTMP]

FAQ

Q: Can the OBS‑NDI plugin achieve the same effect?

A: No. NDI is built for real‑time, low‑latency transport. Using it for long LAN delays is the wrong tool for the job.

Extra notes

High-risk words (examples)

- Names/titles of state leaders

- Sensitive political events/slogans

- Minority languages (e.g., Tibetan) — current ASR often misclassifies and flags them

High-risk visuals

- Foreign nationals on camera

- Minors on camera

- National/party flags or emblems

Touching any of the above can get a live room suspended instantly. Hence safe broadcast (delay + active monitoring for DUMP/MUTE) is effectively mandatory for commercial streaming in China.

Footnotes

- [1], [2] MAX_BUFFERING_TICKS: Artificial Limit to Audio Sync Offset? | OBS 960 ms Audio Buffer Cap Discussion
- [3] OBS Forum discussion “help with media source and rtmp”: setting listen=1 in a Media Source makes OBS act as an RTMP server
- [4] OBS Forum post “Adding a timed delay to my stream”: how to enable Broadcast/Stream Delay in Settings → Broadcast/Advanced
- [5] OBS Official Knowledge Base “SRT Protocol Streaming Guide”: usage and meaning of the mode=listener / mode=caller URL parameters